I have a Debian 12 server (public IP 85.xxx.xxx.xxx on enp6s0) running several LXC containers attached to a network bridge, cbr0.

Since the public IP is dynamic, I had to set up forward and prerouting rules with DNAT so that incoming requests reach the containers. E.g. ports 80/443 are DNATed to container 10.10.0.1. Here are my nftables rules:

    flush ruleset

    table inet filter {
        chain input {
            type filter hook input priority 0; policy drop;
            ct state {established, related} accept
            iifname lo accept
            iifname cbr0 accept
            ip protocol icmp accept
            ip6 nexthdr icmpv6 accept
        }

        chain forward {
            type filter hook forward priority 0; policy accept;
        }

        chain output {
            type filter hook output priority 0;
        }
    }

    table ip filter {
        chain forward {
            type filter hook forward priority 0; policy drop;
            oifname enp6s0 iifname cbr0 accept
            iifname enp6s0 oifname cbr0 ct state related, established accept

            # Webproxy
            iifname enp6s0 oifname cbr0 tcp dport 80 accept
            iifname enp6s0 oifname cbr0 udp dport 80 accept
            iifname enp6s0 oifname cbr0 tcp dport 443 accept
            iifname enp6s0 oifname cbr0 udp dport 443 accept
        }
    }

    table ip nat {
        chain postrouting {
            type nat hook postrouting priority 100; policy accept;
        }

        chain prerouting {
            type nat hook prerouting priority -100; policy accept;

            # Webproxy
            iifname enp6s0 tcp dport 80 dnat to 10.10.0.1:80
            iifname enp6s0 udp dport 80 dnat to 10.10.0.1:80
            iifname enp6s0 tcp dport 443 dnat to 10.10.0.1:443
            iifname enp6s0 udp dport 443 dnat to 10.10.0.1:443
        }
    }

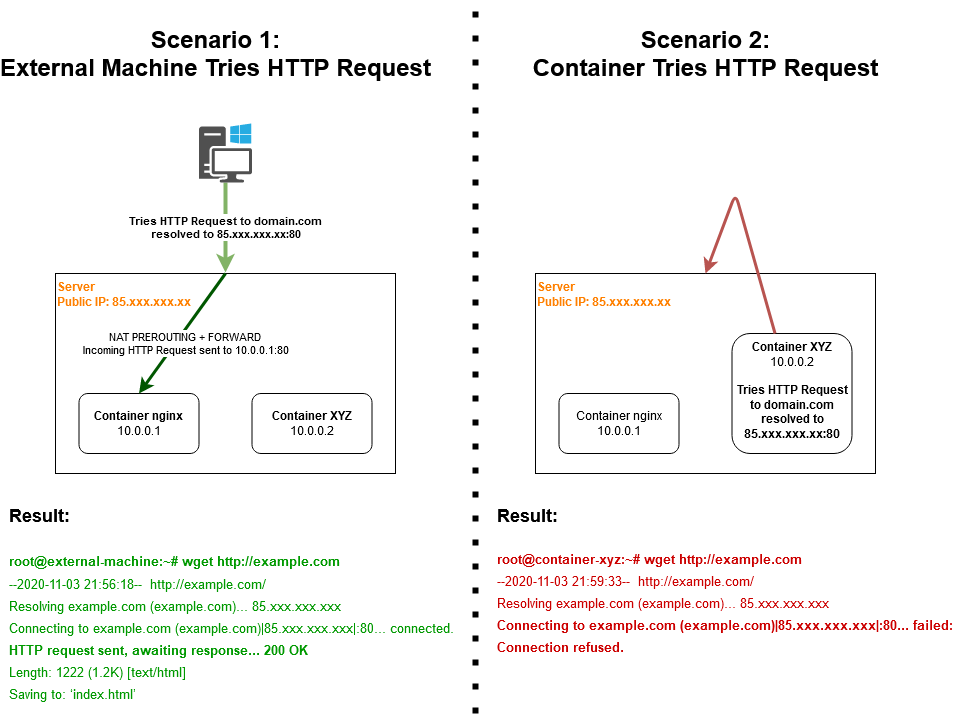

Now the problem is hairpin NAT. I have multiple containers hosting websites, and sometimes some of them need to communicate with other containers using domain names. When they run a DNS query for those domains they get the host's public IP, and the connection fails.

How can I fix this situation without resorting to DNS hacks?

Is there a way to set up nftables to forward these requests internally while having a dynamic IP? How does the iifname match above play into this?
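To make the question concrete, here is a minimal, untested sketch of the kind of address-independent rules in question; it is not a confirmed solution. It swaps the `iifname enp6s0` match in prerouting for `fib daddr type local` (destination is any address owned by the host), so requests arriving from cbr0 would be DNATed as well without hard-coding the public IP. The container subnet `10.10.0.0/24` is an assumption, as is the idea that hairpinned traffic needs to be masqueraded so that replies return through the host.

```nft
table ip nat {
    chain prerouting {
        type nat hook prerouting priority -100; policy accept;

        # Webproxy: match on "destination is a local address" instead of
        # the incoming interface, so traffic from cbr0 is covered too.
        fib daddr type local tcp dport { 80, 443 } dnat to 10.10.0.1
        fib daddr type local udp dport { 80, 443 } dnat to 10.10.0.1
    }

    chain postrouting {
        type nat hook postrouting priority 100; policy accept;

        # Hairpinned connections only (assumed subnet 10.10.0.0/24):
        # rewrite the source so 10.10.0.1 replies via the host.
        ip saddr 10.10.0.0/24 ip daddr 10.10.0.1 masquerade
    }
}

table ip filter {
    chain forward {
        type filter hook forward priority 0; policy drop;

        # Allow container-to-container traffic routed back out of cbr0;
        # the existing rules for enp6s0 would stay alongside this one.
        iifname cbr0 oifname cbr0 accept
    }
}
```

One caveat with this sketch: `fib daddr type local` also matches the host's own address on cbr0, so a container connecting to that address on ports 80/443 would be redirected to 10.10.0.1 as well.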

Thank you.